SEO Wisdoms

Or: how to get your data to be found on the internet…

Intro

So… at the office we were moving from one web mail/storage system to another (too bad, not getting any better), and we had to go through our old documents to determine what to keep.

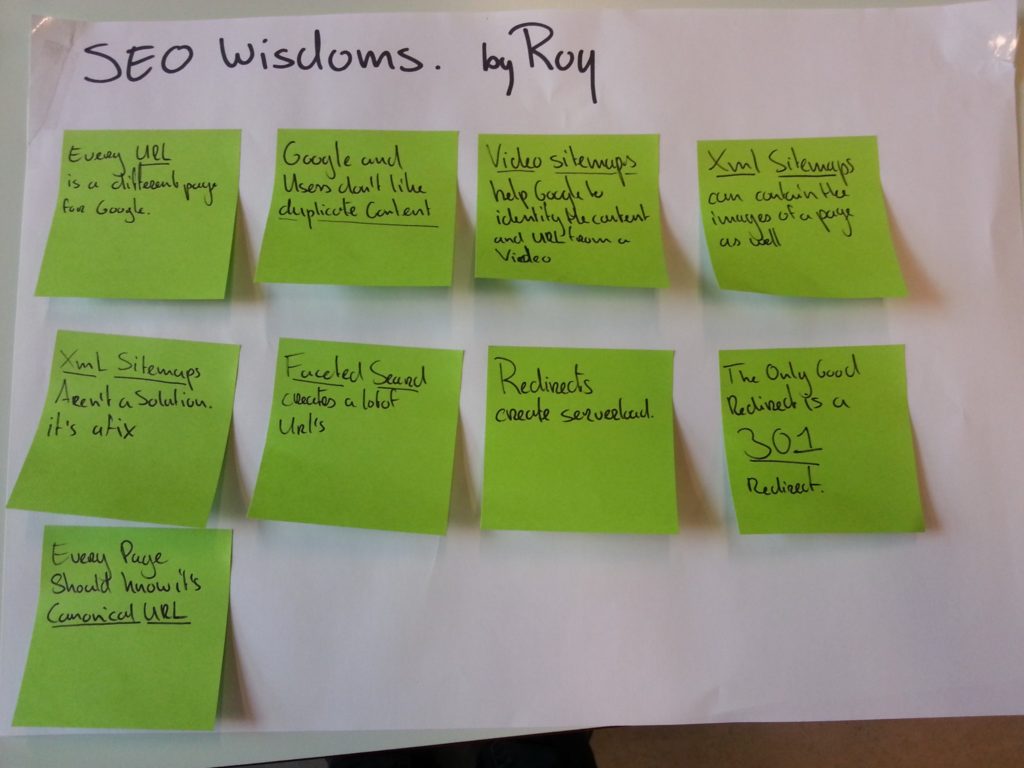

And in the pile of stuff to check, there was a nice photo of some Search Engine Optimization (SEO) rules which will (probably?) never get old. We did have these rules up on the wall, next to our scrum board on the project at that time. Disclaimer; it’s an old photo, taken in June 2013 😉

Note: this is by no means an attempt to teach SEO to anyone, it’s merely a simple reminder to my self, in case I do need some SEO in the future again. And in the off-chance that it helps someone else out, that’s fine; win-win.

Let’s go over them one by one, and add my interpretation / opinion / memories (from 6 years ago). My guess is that they were not in any particular order. And where you see “Google“, just fill in your search engine name of choice (e.g. duckduckgo, bing, or any other);

(1) Every URL is a different page for Google

Well, this seems to be an important base and recurring theme in these rules. If you do not take care of rule (8) and (9), you will suffer from rules (2), (6), and possibly (7). The URL (the content of the address bar line from your web browser), is a pointer to your page. Google collect’s these pointers and the content connected to them, to present as the search results. You can compare the URL’s to the page numbers in a book. Google will try to read all the pages in the book, and show them to the users if a search term fits the page content.

(2) Google and Users don’t like duplicate content

Following on rule (1), you can imagine that if you serve different URL’s, but with the same content, that Google will show all of these duplicate results when the search query matches. Users don’t like this, because you only want to be told once what has been found for your search. Google doesn’t like this also (or actually Google does not mind, it’s just punishing the web site owner). What Google does, is give so called rankings to search results. Chances are that your duplicate hit’s will get each only part of the ranking, to make sure you are not getting higher in the search result by just repeating your pages (like spam). Effectively lowering your page’s ranking. It will be higher for unique content. Or… if it’s a duplicate you really need to be there for technical reasons, you can use rule (9) to fix this.

(3) Video sitemaps; help Google to identify the content and URL from a video.

Not much to say about this one. A site map is a technical (XML) file, containing a list of URL’s (and some meta data per URL) for your web site. Google will go and read all the page for these URL’s, and make them available for searches. This way it might do some quicker indexing of your website, as it does not have to follow all links on all pages first. And it makes it possible to index pages for which there are no links to the page at all (although I do expect these non-linked pages might get a bit lower ranking than page which ARE linked to from within your site). Also, if memory serves me right, you can indicate to Google what the page change frequency is (per URL), to allow for more frequent scanning (indexing) of pages which get updated more often (for example your homepage).

(4) XML sitemaps; can contain the images of a page as well.

Similar to (3). Where number (3) is about video’s on your site, the same counts for non video pages. And these pages also might have a featured image for example.

(5) XML sitemaps; Aren’t a solution. It’s a fix.

Right… what did Roy mean by this again…? Hmmm… Don’t remember. The only thing I can think of, is the fact that if your website navigation is less than optimal (sucks), you can try to be found/indexed by providing a sitemap. But… these pages will not be ranked as high as could be. If you do have a well thought of, clear way of navigating your site content, using proper menu structures, you will get points from Google for the linked pages. The ones to be reached the easiest will get higher ranks as the ones which are more hidden behind multiple layers of navigation. Google even goes as far as honoring the top ranked menu items by a nice navigation menu in the search result as well.

(6) Faceted Search; creates a lot of (duplicate) URL’s.

Faceted search is a way to navigate / filter through your search results on your website. It is providing views (multiple URL’s) to the SAME content in many different ways. As stated before, you do not want to get these results indexed as duplicates. For example when searching for pasta recipes, you can end up on the same result by filtering on course (Main), or filtering on country of origin / kitchen (Italian), or even with a combination of filters. Use (9) to get rid of duplicates, or even let Google know NOT to index the facet combinations.

(7) Redirects; create server load.

This one, I only partially agree upon. My guess is that Roy did have some old website(s) in mind, which had to do some heavy querying to some databases, to find out for old URL’s what the new replacement destination should be. On our projects we did manage to use quite fast look-ups for these kind of relocations, and in that case the additional server load is really minor, nothing to show up in the system load. OK, it does create some load, but nothing we can’t handle.

(8) The only good redirect is a 301 redirect.

Ah, yes… this might be true with respect to SEO and ranking and de-duplicating Google content. So you probably should follow this tip. However, keep in mind that a 301 (permanent move) is CACHED for a long long time in the browsers of the end users. So make damn sure that you do not make mistakes in the 301 destination, and definitely make sure the destination is not a dynamic one (for example pointing to your latest article, every day a new one). You have NO WAY of getting rid of the cached 301 at the user’s end. What you could try, is adding an expire header at the same time as sending the 301, but I’m not sure it’s honoured by the browsers.

The effect of a 301 is that Google will update it’s index from the old URL to the new URL, and transfer the old page ranking to the new page (if the page has similar content I guess).

If you would use a 302 (temporary move), then Google will not do this kind of housekeeping, sometimes leading to duplicate search results, or loss of ranking.

The advantage of a 302 is of course that it’s not cached by the end users browser, so it’s as the name indicates a temporary thing, which can change at any time.

When redirecting, please try making an effort to redirect to the proper destination in ONE GO. We did have occasions in the past where we did multiple moves of pages, leading to redirects pointing to redirects pointing to redirects pointing to… you get the point 😉 Even though the end user might not really notice (if the redirect chain does end), it might cause a delay for Google in spidering / crawling your site. It might decide to just follow one redirect a a time, and put the new URL at the bottom of the crawler queue. Causing your page to be indexed much later than expected.

(9) Every page should know it’s canonical URL.

Ok, so you DO have duplicate content on your site. Either by having faceted search, or having a bit of personalized content as part of a page, or some other technical or functional reason for which you did have to add parameters to your URL which make them point to the same page, regardless of having different values in the parameters. Or for example having a parameter indicating the source of the link to the page (like from an add, or from an affiliate link). In that case your site will store the value in your database for reporting, but the user will see the same page in all cases.

Now how to fix this?

Simple; add a bit of meta data in the header of the page, indicating what the “normal” or “base” URL of the page is. This is called the “Canonical URL”.

Google will read this canonical URL, and make sure that all duplicate pages which have the same canonical will end up as a single search result, with the combined page ranking. In other words, Google will see all these pages as one and the same page, just as your human visitor will see.

End of rules – any last thoughts?

Apart from the bit about canonical’s fixing your duplicate content, you can also just prevent Google from indexing (duplicate) stuff. You can do this through URL patterns in a robots.txt file in the root of your website, or by adding a noindex meta tag in the html response, or by adding a special response header in the http protocol response.

You can also instruct search engines what links to follow (spider), and which ones not to follow. This might also be quite helpful.

Removing obsolete pages from your site might help also. This might improve the ranking of the pages which are not obsolete (think of the expression “can’t see the forest for the trees“).

Do not try to trick Google (let it index the same stuff the users do see). They might give you penalties for abuse (not sure if this is actually true, might just be hearsay).

When search-ability is important, hire an expert if you can afford it…

And did you know there are special markup tags / machine readable metadata info elements you can put in your page, which tell google what kind of data you are showing? For example recipe attributes, product attributes, review attributes, book attributes, etc… You will get extra rewards for these by having nicely formatted search results for these kind of topics (see rdf/microdata specifications on schema.org).

As mentioned before; having a good – consistent – site navigation is also important. Make sure the important pages are just a click away from the user, and the less important ones more than a click. This way Google will rank them accordingly, and perhaps even try to simulate showing your site navigation menu (if it’s considered consistent). Note: I just tried to get a screen print of such a menu in a search result, but could not find any. Did they get rid of that feature? Or… perhaps we did break that feature by having a less than optimal navigation nowadays (on the site I looked at). It did work in the past. Oops?

The 9-rule source is a SEO expert you can safely hire for your projects (here in the Netherlands). He is really good in this stuff, and has worked with many large companies to get the most out of the internet as possible. You can find him over here – www.chapter42.com (Roy Huiskes). To Roy; Just in case you find this article by accident, thanks for your time and input at our projects! 😉

Thijs.

2 thoughts on “SEO Wisdoms”

Haha lovely, those were the good days! Funny thing is most of them are as useful today as they were in june 2013.

And about the redirects: some developers put all their redirects in an .htaccess. With over 25k redirects, that will be more heavy on the server, and of course if it isn’t an SPA it will be more webserver requests in most of the systems.

Thanks for the tips. Read it all!