Fluent Bit Elastic Search Data Type Filter

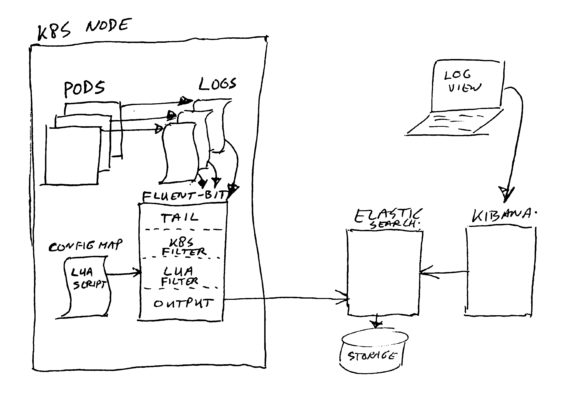

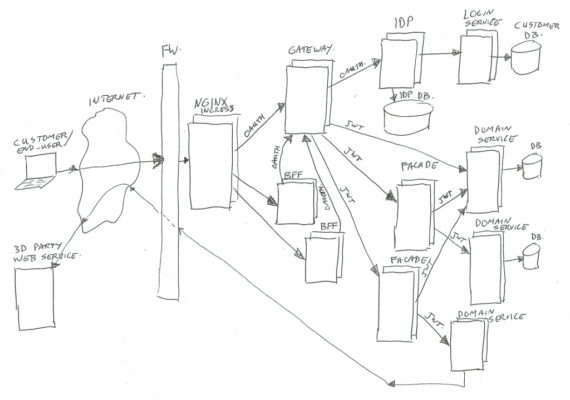

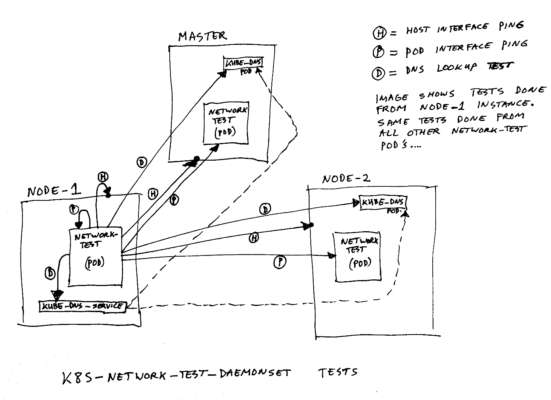

Summary

I have created a Fluent Bit Lua filter that forces the proper data types on log records before they are sent to our Elastic Search (log collector) database, to prevent Elastic from rejecting them.

GitHub Project: https://github.com/atkaper/fluent-bit-lua-filter-elastic-data-types

Environment

We are running a bunch of on-premises Kubernetes clusters, each with around 20 nodes and many deployments. The deployments use different technologies: Java, Kotlin, Node.js, Go, Python, C, … and quite a few standard open source applications. Most of them have been…